Emotion AI: A Leader’s Guide to Use Emotional Intelligence for Effective AI Adoption and Change Management

Just as in every other change process, effective AI adoption will be driven by emotions. Not by the technology itself, not by mandates from above, but by how people feel about what AI means for their work, their identity, and their future.

The Hidden Emotional Landscapes

Most organizations are approaching AI adoption as a technical challenge. They invest in tech, run training sessions, and wonder why adoption remains patchy. But watch closely, and you’ll see the real story unfold in break rooms and Slack channels, in the hesitation before someone admits they don’t understand something, in the defensive posture of an expert whose decades of knowledge suddenly feels threatened.

I recently had a Zoom coffee with a colleague, let’s call her Sarah: a brilliant business leader who’s been with her company for fifteen years. “I should already know this,” she confessed, her voice barely above a whisper. “Everyone else seems to get it. I feel like I’m falling behind.” This wasn’t a skills gap; Sarah is one of our sharpest minds. This was shame, that powerful emotion that makes us smaller, quieter, less likely to ask for help.

Meanwhile, Michael, our Director of Marketing, can barely contain his enthusiasm. “This is incredible!” he says, sharing yet another AI experiment. But when I privately asked AI enthusiasts like him about their implementation timelines, their excitement revealed a shadow: “Honestly? I’m terrified we’re going to mess this up. What if we automate the wrong things? What if we lose what makes us… us?”

These emotions—shame, fear, excitement, curiosity—aren’t side effects of AI adoption. They’re the primary forces shaping whether AI strengthens or fractures your organization.

Why AI Feels Different

This isn’t our first technology rodeo. We’ve navigated the shift to cloud computing, the mobile revolution, the social media transformation. But AI hits differently, and it hits deeper.

When social media emerged, you could choose whether to participate. When new software rolled out, you could often delay adoption until the bugs were worked out. AI doesn’t offer that luxury. It’s already in your phone’s autocomplete, your email’s smart replies, your customer service chatbots. Your employees aren’t encountering AI for the first time in your training session—they’re bringing a whole constellation of feelings about AI from their daily lives.

More importantly, AI touches something fundamental: our sense of competence and purpose. When a tool can write, analyze, create, and problem-solve, it forces us to confront uncomfortable questions. If AI can do what I do, what’s my value? If my expertise can be automated, who am I professionally?

These aren’t questions you can answer with a training manual.

This all adds up to a perfect storm of uncertainty. The questions are too big. The next steps are beyond any precedent. The implications are too vast. In this storm, we lose our sense of direction because we can’t see the road signs. Our brains treat that as danger and trigger protective patterns, which also happen to inhibit innovation and change.

The Grief No One Talks About

Perhaps the most overlooked emotion in AI adoption is grief: the deep sadness that comes with loss, even when that loss might ultimately lead to something better.

I think of a coaching client I spoke with recently, let’s call him Ramesh: a fellow C-level leader with a lifetime of experience “reading the tea leaves” of meta-trends and synthesizing vast amounts of information into actionable insights. When he first saw AI do in minutes what took him days, he didn’t feel relief. He felt a profound sense of loss. “It’s like watching your life’s work become a commodity,” he told me. “All those years of developing my expertise, and now anyone can do it with a prompt.”

While I think it will be many years before AI can surface insights the way he does, this grief is real and deserves acknowledgment. When we rush past it, when we cheerfully insist that AI will “free you up for more strategic work,” we invalidate a fundamental human experience. People need space to mourn the changing nature of expertise before they can embrace new opportunities.

The Trust Paradox

One of the strangest dynamics I’ve observed is what I call the trust paradox of AI. People simultaneously overtrust and undertrust AI, often in the same conversation.

They’ll accept AI-generated analysis without questioning the methodology, then refuse to use AI for tasks it handles masterfully because “a human should do that.” They’ll share intimate thoughts with ChatGPT while fearing their company’s AI tools are spying on them. They’ll rely on AI for critical decisions while dismissing its ability to help with routine tasks.

This paradox stems from emotional confusion. Without clear frameworks for when and how to trust AI, people default to their feelings in the moment—and those feelings are often contradictory.

As a result, people and organizations are misapplying both AI and emotional intelligence (EI). They’re handing creative, generative, emotionally laden work to AI because it seems easier, even though that’s the work humans do better. We need to use the right tool for the job, and that will require rebuilding and strengthening trust between people.

Emotional Safety Is the Baseline for Effective AI Adoption

Trying to conquer these emotions misses the point. Engaging with them is how we put emotional intelligence to work.

That often starts with naming the emotions in the room, which builds trust because it tells people they have permission to be human. Consider these principles that honor both the technology and the emotions:

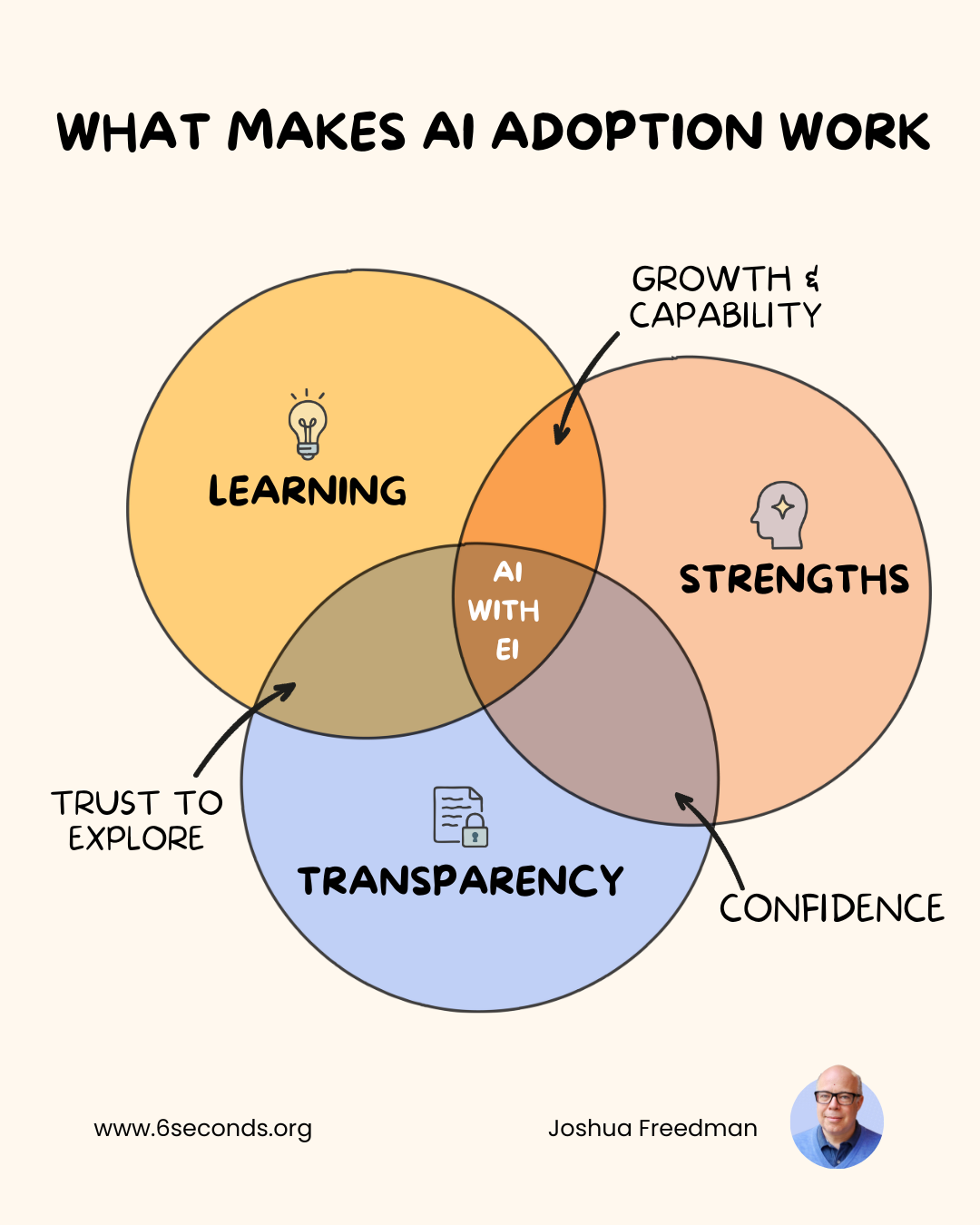

Transparency becomes trust. Explain not just what AI tools we’re using, but why. Share openly about data handling, decision-making processes, and boundaries. When people understand the guardrails, fear diminishes.

Learning needs low stakes. Create “AI playground” sessions where people can experiment without consequences. No productivity metrics, no judgment, just curiosity and exploration. Amazing things happen when shame leaves the room.

Spotlight human strengths. Pair every AI implementation with an explicit discussion of the human capabilities it enhances rather than replaces. For example, if you automate report generation, celebrate how this frees analysts to do what they do best: identify patterns, question assumptions, and tell the stories behind the numbers.

The Identity Evolution

What we’re really managing isn’t technical change. It’s identity evolution. People aren’t just learning new tools; they’re reimagining their professional selves. If they don’t, they end up trying to step in a bold new direction while following tired old templates. As Marshall Goldsmith put it, What Got You Here Won’t Get You There.

This redefinition is partly practical: realizing how AI tools can help and learning to make them work. But it’s largely emotional: coming to see ourselves in new ways and creating a new story of our value and identity.

Earlier, I wrote about Ramesh and his grief over a lost identity. That grief is about giving up his old way of creating value: an expertise of knowing, refined through decades of work. In our conversation, I asked him to consider a different perspective:

Is your value in what you know and the report you produce – or in knowing what to ask, and why that matters?

The same question applies to me as an author. In some ways, AI is going to replace me. It’s faster, cheaper, and better organized.

But it doesn’t understand what people need to hear. I have a uniquely human ability to listen not just to what people say, but to what’s left unsaid. That’s empathy. That perception tells me what to write, but more importantly: why.

This offers a shift in perspective. If we define ourselves by “what we do,” then AI can replace us. If our identity is built on “why we do,” then it can’t. This is a profound redefinition. It requires emotional support, time for reflection, and repeated reinforcement. You can’t rush identity evolution.

The Connection Imperative

As AI becomes more prevalent, a troubling pattern is emerging. Did you see the Upwork survey that found people trust AI more than they trust other people, and prefer talking to AI?

People are turning to AI for emotional support. They’ll ask AI for advice about interpersonal conflicts instead of talking to their manager or a coach. GenAI is endlessly patient and can offer useful reflection, but there’s growing evidence that the most widely adopted GenAI tools are not well designed for this role.

This “confiding in AI” behavior isn’t all bad, but it’s dangerous if we let AI become a proxy for quality human connection. AI can recognize emotional patterns and suggest strategies, but it can’t actually care, and it has no intuition. It can’t provide the co-regulation that happens when one nervous system calms another. It can’t offer the deep recognition that comes from being truly seen by another person.

We need to be explicit: work with AI as a tool for particular types of thinking, partner with humans for meaning. Protect and even expand spaces for human connection—profound dialogue, meals together, walking meetings, mentoring, brainstorming. Keep the balance: The more AI we integrate, the more human connection we need to cultivate.

Moving Forward with Emotional Intelligence

The organizations that will thrive with AI aren’t the ones relying on sophisticated technology or the biggest AI budgets. They’re the ones that approach AI adoption with emotional intelligence.

This means picking up on emotional cues and understanding the meaning underneath. The project manager who seems resistant might actually be grieving. The analyst asking endless questions might be managing anxiety through information-gathering. The executive pushing for rapid adoption might be afraid of being left behind.

It means creating change processes that honor the full spectrum of human experience. Yes, we need clear implementation plans and success metrics. But we also need space for uncertainty, forums for processing fear, and celebration of the courage it takes to evolve.

Most importantly, it means remembering that we’re not just adopting AI—we’re evolving what it means to work, to contribute, to matter in an organization. That’s not a technical challenge. It’s a deeply human journey that deserves to be treated as such.

Learning from Our Own Emotional Journeys with AI

As I write this, I’m aware of my own evolving relationship with AI. This article itself is a collaboration—my thoughts and experiences shaped by human insight, AI helping me organize and refine them. There’s something both humbling and empowering about that partnership.

I’ve felt the full range of emotions I’ve described here. The shame of not understanding something immediately. The fear that my skills might become obsolete. The annoyance of an AI tool telling me it fixed my code, only to see the same error recurring. The excitement of discovering new capabilities. The overwhelm of feeling behind even though I’m feeling more productive now than ever before. The grief of watching familiar ways of working disappear.

But mostly, I feel curious. Curious about what becomes possible when we stop pretending that technological change is emotionally neutral. Curious about the cultures we can build when we honor both artificial intelligence and emotional intelligence. Curious about who we become when we’re augmented but not replaced.

The AI revolution isn’t “someday”; we’re in it, and it now has so much momentum that it’s here to stay. We suffer if we try to hold back the tide, but we can choose how we feel about the change. As leaders, how we feel changes how others feel, which changes the outcomes.

The future of work isn’t about choosing between humans and AI. It’s about creating workplaces where both can thrive, where technology amplifies human strength rather than diminishing it, where efficiency gains don’t come at the cost of connection.

That future starts with a simple recognition: AI adoption challenges are emotional before they’re technical. When we acknowledge this truth and lead accordingly, transformation becomes not just possible, but profoundly human.

For leaders ready to navigate the emotional landscape of AI adoption, remember: Your team doesn’t need you to have all the answers about AI. They need you to create space for all the feelings that come with change. Start there, and the rest will follow.

For more on how to use emotional intelligence to win at transformation and change, check out:

How to Stop Failing at Change

How does EQ support transformation?

- Leading Change in Times of Uncertainty: Why Managing Transitions Matters More Than Strategy - December 28, 2025

- Emotion AI: A Leader’s Guide to Use Emotional Intelligence for Effective AI Adoption and Change Management - December 2, 2025

- Requesting Trust: 5 Practical Steps to Repair and Increase Trust - November 20, 2025